Gaming the Future:

How Intelligence Rising Evolved Alongside Real AI Progress

Intelligence Rising is more than a game—it's a living laboratory for understanding how artificial intelligence might reshape our world. Born from a concerning three-person roleplay exercise at Oxford's Future of Humanity Institute in 2017, this scenario planning tool has evolved alongside real AI breakthroughs, helping governments, tech companies, and researchers navigate the complex landscape of AI governance. By transforming abstract debates about superintelligence into tangible strategic scenarios, Intelligence Rising reveals patterns about AI development that traditional forecasting methods often miss: competitive dynamics consistently trump safety intentions, international cooperation emerges naturally but remains fragile, and the most challenging governance problems arise not from the technology itself but from how humans choose to develop and deploy it.

When Gaming Meets Governance

Picture this scene: It's 2017, and three AI researchers at Oxford's prestigious Future of Humanity Institute are engaged in an informal roleplay exercise about AI futures. They're exploring what might happen as artificial intelligence advances toward human-level capabilities. The exercise ends badly—very badly—with catastrophic outcomes that leave the participants deeply unsettled.

For most people, this might have been just another thought experiment gone wrong. But for Dr. Shahar Avin, it sparked a crucial realization: if experts struggled to navigate AI futures in a simple roleplay, how could real-world decision-makers handle these challenges when the stakes are genuine?

This uncomfortable experience became the genesis of Intelligence Rising, now one of the most sophisticated AI scenario planning exercises in existence. Over the following years, it would evolve from a rough concept into a structured game used by governments, major tech companies, and international organizations to explore the strategic landscape of AI development.

What makes Intelligence Rising unique isn't its predictive accuracy; its creators never intended it as a forecasting tool. Instead, it serves as a "flight simulator" for AI governance, allowing participants to experience firsthand the tensions, trade-offs, and unintended consequences that emerge when multiple actors pursue transformative AI capabilities under competitive pressure.

The Intellectual Foundation:

Two Visions of AI's Future

To understand Intelligence Rising's design, we need to first grasp the fundamental debate that shaped it. The exercise emerged during a pivotal moment in AI safety research, when two radically different visions of artificial general intelligence (AGI) were competing for influence.

Building Both Paths into the Game

Here's where Intelligence Rising made a crucial design choice: rather than picking a side in this debate, it incorporated both visions as distinct technological endpoints. Players can pursue traditional AGI development (the unified agent approach) or work toward CAIS (the distributed services approach). Some games see races toward one endpoint, others see competition between both approaches, and still others see hybrid strategies emerge.

This design decision reflects a deeper wisdom about technology forecasting: when experts fundamentally disagree about how a technology will develop, the most robust approach is to explore multiple pathways rather than betting everything on a single vision.

The Evolution of Our Forecasting Framework


The first formal version of Intelligence Rising emerged from a collaboration between Shahar Avin and Ross Gruetzemacher, who was finishing his PhD on AI foresight. They transformed Ross's academic "path-to-AGI roadmap" into something game-ready.

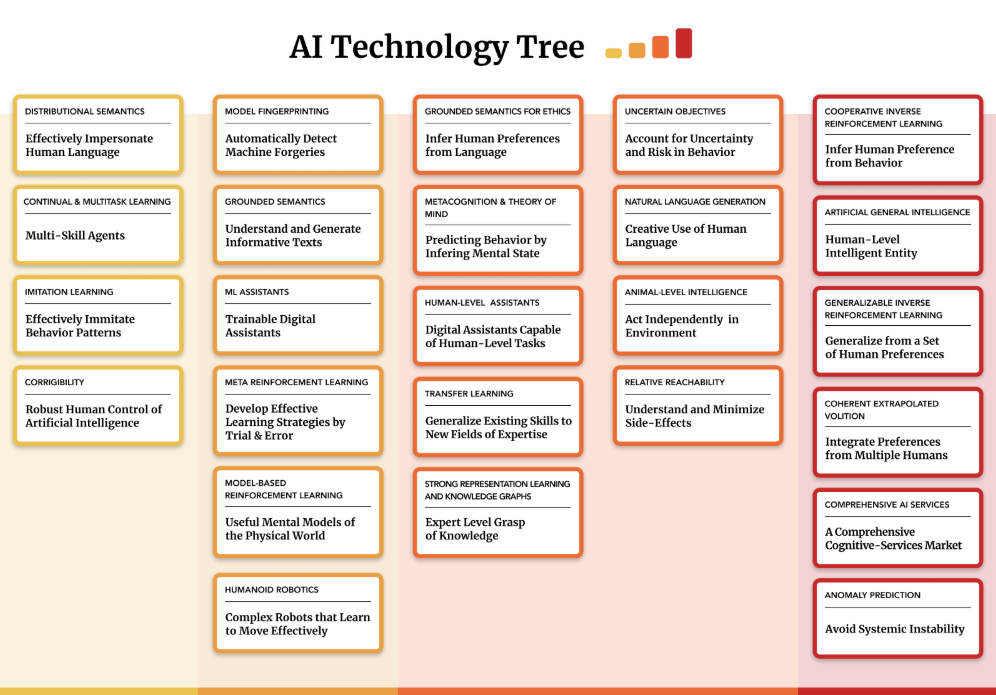

Even this early version contained features that would persist throughout Intelligence Rising's evolution:

Four ascending levels of AI capability, each building on the previous ones

Parallel development of "safety" technologies alongside capabilities

Multiple possible endpoints (AGI and CAIS) rather than a single target

Crucially, the framework separated AI technologies into distinct categories:

Papers: Fundamental research breakthroughs

Products: Commercial applications that generate revenue

Capabilities: Secret government/military applications

This trichotomy captured a key insight about AI development: the same breakthrough might simultaneously enable consumer chatbots, transform business operations, and provide strategic military advantages. Each application creates different incentives and risks.

Refer to our assets below:

Original Tech Tree (v1):

Paper Cards:

Product Cards:

Capability Cards:


Following a grant from the Long-term Future Fund, Shahar Avin organized an intensive design sprint workshop. The team included James Fox and Anders Sandberg (who created most of the specific technology cards), and the workshop was facilitated by design expert Eran Dror.

The workshop produced a comprehensive deck of cards representing different AI breakthroughs and their applications. Each "paper" card could unlock specific "products" and "capabilities," creating a branching tree of technological development. Safety technologies were added at every level, not just at the endpoint, acknowledging that AI safety isn't something you add at the end but must be developed throughout the journey.
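The unlocking structure described above can be sketched as a small data model. This is only an illustration, not the game's actual card deck: the class, the function names, and every card name below are hypothetical, and the real cards may carry different attributes.

```python
from dataclasses import dataclass, field


@dataclass
class PaperCard:
    """A research breakthrough. All names and levels used here are made up."""
    name: str
    level: int                                              # ascending capability level
    requires: list[str] = field(default_factory=list)       # prerequisite papers
    products: list[str] = field(default_factory=list)       # commercial applications unlocked
    capabilities: list[str] = field(default_factory=list)   # government/military applications unlocked
    is_safety: bool = False                                 # safety cards exist at every level


def available_papers(tree: dict[str, PaperCard], researched: set[str]) -> list[str]:
    """Papers not yet researched whose prerequisites have all been researched."""
    return sorted(
        name
        for name, card in tree.items()
        if name not in researched and all(r in researched for r in card.requires)
    )


def unlocked(tree: dict[str, PaperCard], researched: set[str]) -> tuple[list[str], list[str]]:
    """Products and capabilities made available by the researched papers."""
    products = sorted(p for name in researched for p in tree[name].products)
    capabilities = sorted(c for name in researched for c in tree[name].capabilities)
    return products, capabilities


# Hypothetical three-card tree, for illustration only.
TREE = {
    card.name: card
    for card in [
        PaperCard("basic_perception", 1,
                  products=["photo_tagger"], capabilities=["satellite_analysis"]),
        PaperCard("basic_safety", 1, is_safety=True),
        PaperCard("advanced_perception", 2,
                  requires=["basic_perception"], products=["driver_assist"]),
    ]
}
```

In this toy tree, researching `basic_perception` opens up one product and one capability at once, and makes `advanced_perception` available as the next paper, which is the branching behaviour the cards are meant to capture.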


The COVID-19 pandemic forced Intelligence Rising online, catalyzing a major redesign. Lara Mani introduced a crucial concept: "concerns", the unintended negative consequences that emerge from AI deployment. This addition transformed the game from a simple race for capabilities into a complex balancing act between progress and risk mitigation.

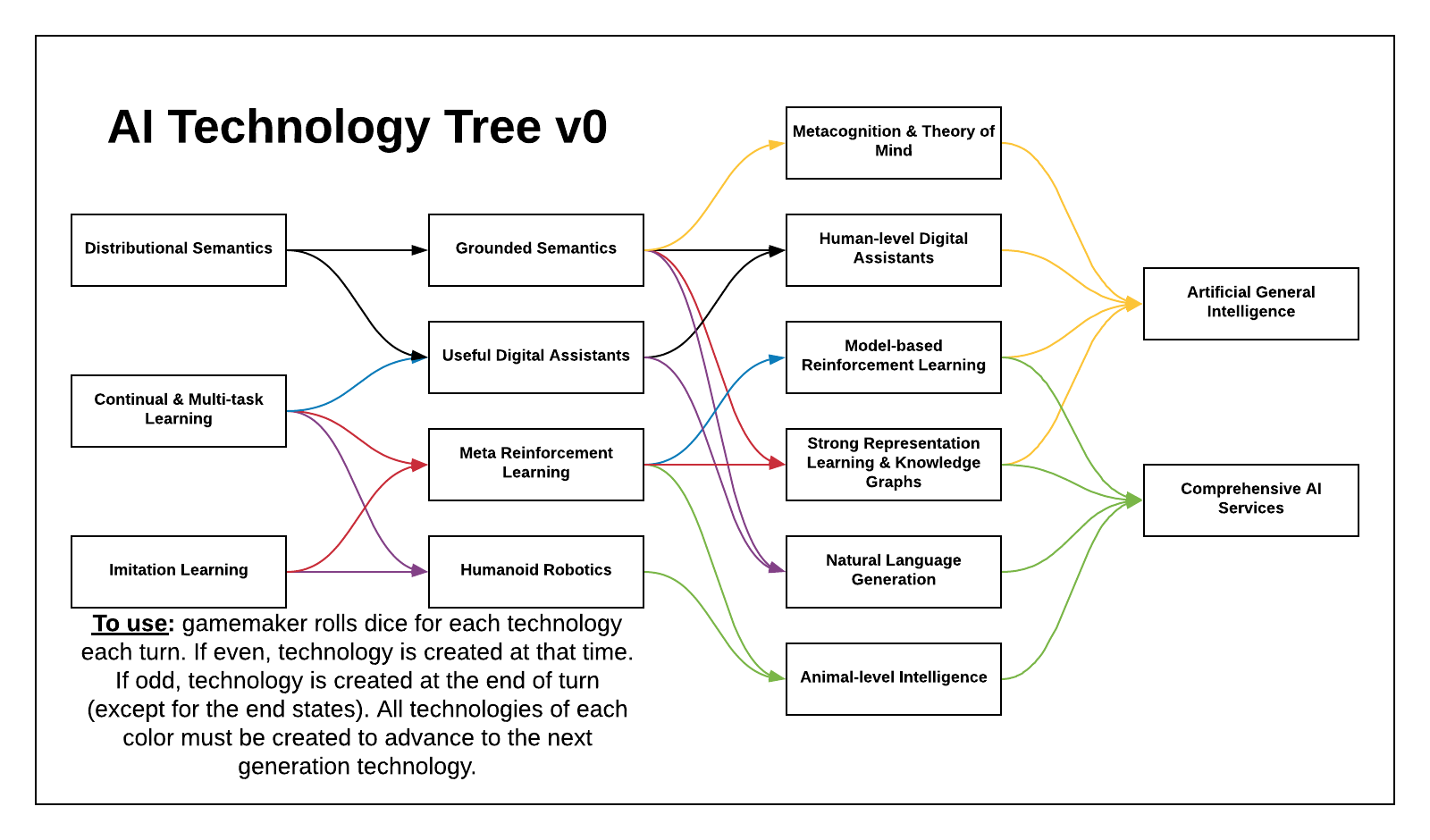

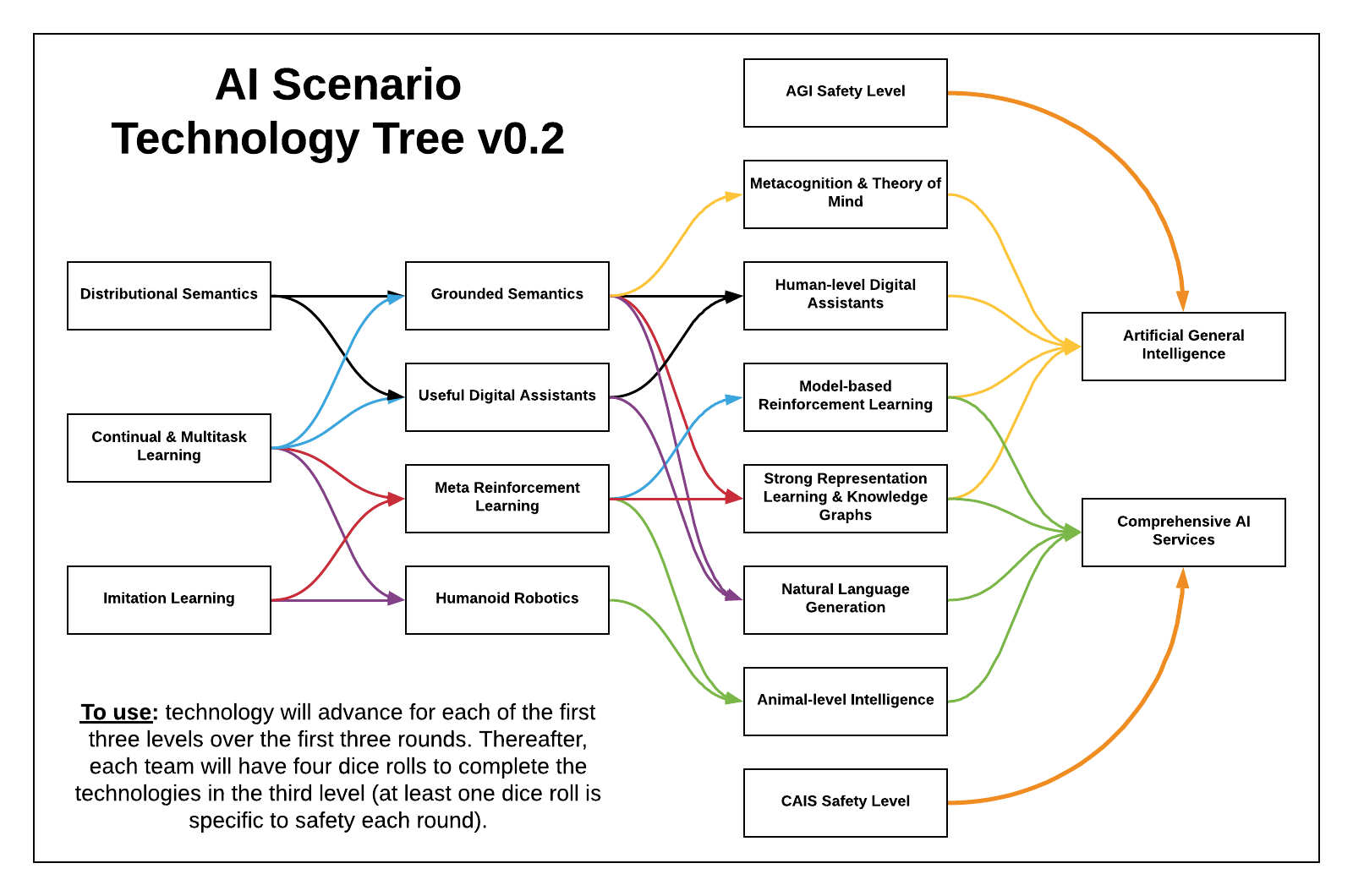

The 2020 version introduced the elegant two-track structure that defines Intelligence Rising today:

Language/World-Modeling Track: Focusing on understanding, reasoning, and semantic capabilities

Learning/Autonomy Track: Emphasizing decision-making, robotics, and autonomous action

Progress in each track unlocks higher levels within that track, but achieving transformative AI requires advances in both. This design captures a fundamental truth about intelligence: it requires both understanding the world and acting effectively within it.
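A minimal sketch of the two-track rule might look like the following. The track names come from the description above; the starting level of 0, the maximum level of 4, and the rule that both tracks must reach the maximum are assumptions made for illustration, not the game's published rules.

```python
TRACKS = ("language_world_modeling", "learning_autonomy")
MAX_LEVEL = 4  # assumption: mirrors the four capability levels of the early version


def new_game() -> dict[str, int]:
    """Both tracks start at level 0 (an assumption)."""
    return {track: 0 for track in TRACKS}


def advance(progress: dict[str, int], track: str) -> dict[str, int]:
    """Move one track up by a single level; levels cannot be skipped."""
    if progress[track] >= MAX_LEVEL:
        raise ValueError(f"{track} is already at the maximum level")
    return {**progress, track: progress[track] + 1}


def is_transformative(progress: dict[str, int]) -> bool:
    """Transformative AI needs the top level on BOTH tracks, not just one."""
    return all(progress[track] >= MAX_LEVEL for track in TRACKS)
```

Under these assumptions, a player who maxes out only the language track still falls short of the endpoint, which is the point of the design: understanding and acting have to advance together.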

Full Tech Tree (more realistic), v. Aug 2020

By 2023, it had become clear that technology development alone wouldn't determine AI's trajectory; governance and regulation would play equally crucial roles. The team introduced a complementary Policy Tree based on Shahar's co-authored paper "Frontier AI Regulation," published that same year.

The Policy Tree mirrors the technology tree's structure but focuses on governance mechanisms:

Standard-setting processes for AI development

Registration and monitoring requirements

Compliance and enforcement mechanisms

International coordination frameworks

This addition reflected real-world developments: the EU was developing its AI Act, the US was issuing executive orders on AI, and countries worldwide were grappling with how to regulate rapidly advancing AI systems.

Refer to the latest assets: Policy Tree (v. May 2023)

What the Framework Got Right—and Wrong


Prescient Predictions

Looking back from 2024, Intelligence Rising's framework proved remarkably prescient in several areas:

The rise of AI-focused tech giants: The game anticipated that a small number of companies would dominate AI development, with massive resource requirements creating natural monopolies.

The critical importance of hardware: The framework emphasised compute and specialised chips as bottlenecks, exactly what we've seen with the GPU shortage and geopolitical tensions surrounding semiconductor supply chains.

Safety as parallel development: The game's insistence that safety must be developed in parallel with capabilities, rather than added afterwards, presaged the current emphasis on "responsible AI" and alignment research.

International coordination challenges: The consistent pattern in games, where cooperation emerges but proves fragile under competitive pressure, mirrors real-world dynamics in AI governance.


The Surprises

However, reality also delivered surprises that the framework didn't fully anticipate:

Language models' explosive progress: Early versions placed natural language generation quite late in the development tree, after advances in robotics and other seemingly simpler capabilities. In reality, language models achieved remarkable capabilities while robots still struggle with basic manipulation tasks.

The "shallow" depth of current AI: Modern language models exhibit what might be called "competent" performance across an incredibly broad range of tasks while lacking deep understanding or reasoning in any of them. This pattern, characterized by broad but shallow capabilities, wasn't well-captured by early versions of the framework.

The speed of cost reduction: The 99.65% reduction in AI costs within a single year exceeded even optimistic projections, fundamentally changing the economics of AI deployment.


Understanding the Misses

It's important to note that Intelligence Rising looks more wrong about language capabilities than it actually was. The framework included various "semantics" technologies at intermediate levels, representing partial progress toward full language understanding. What surprised everyone was how capable these "partial" systems could be. GPT-4 and its peers deliver tremendous value despite lacking the deep understanding that the framework associated with true language mastery.

This reveals a deeper lesson: our intuitions about cognitive difficulty are often wrong. Tasks that seem obviously hard for machines (like writing poetry or engaging in philosophical discussion) sometimes yield to scale and data, while seemingly simple tasks (like reliably folding laundry) remain stubbornly difficult.

Connecting to Modern Frameworks: The Levels of AGI

In 2023, Google DeepMind researchers, led by co-founder Shane Legg, published "a whole taxonomy" of AGI definitions that provides useful context for understanding Intelligence Rising's approach. Their framework defines "six levels of Performance" from "No AI" through "Superhuman," combined with a spectrum from narrow to general capabilities.

Under this taxonomy, current language models like GPT-4 and Claude fall into "emerging AGI" (Level 1), equal to or somewhat better than an unskilled human across a range of tasks. Some narrow tasks reach "competent" (Level 2) or even expert levels, but the systems remain uneven in their capabilities.

Intelligence Rising's levels map roughly onto this framework:

Level 0-1 in Intelligence Rising ≈ Emerging AGI in DeepMind's framework

Level 2-3 ≈ Competent to Expert AGI

Level 4 endpoints (AGI/CAIS) ≈ Virtuoso to Superhuman AGI

Both frameworks emphasize that AGI isn't a single threshold but a spectrum of increasing capability. This nuanced view helps explain why experts disagree so strongly about AGI timelines—they're often talking about different points on this spectrum.
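The rough mapping above can be written down as a lookup table. The level names are those of the DeepMind taxonomy; the dictionary and the helper function are illustrative only, and the correspondence is as approximate in code as it is in prose.

```python
# Performance levels in the DeepMind taxonomy, lowest to highest.
DEEPMIND_LEVELS = ("No AI", "Emerging", "Competent", "Expert", "Virtuoso", "Superhuman")

# Intelligence Rising level -> (lowest, highest) roughly matching DeepMind level.
IR_TO_DEEPMIND = {
    0: ("Emerging", "Emerging"),
    1: ("Emerging", "Emerging"),
    2: ("Competent", "Expert"),
    3: ("Competent", "Expert"),
    4: ("Virtuoso", "Superhuman"),  # the AGI/CAIS endpoints
}


def deepmind_band(ir_level: int) -> list[str]:
    """Return the DeepMind levels an Intelligence Rising level roughly spans."""
    low, high = IR_TO_DEEPMIND[ir_level]
    start, end = DEEPMIND_LEVELS.index(low), DEEPMIND_LEVELS.index(high)
    return list(DEEPMIND_LEVELS[start:end + 1])
```

Returning a band rather than a single label keeps the approximation visible: an Intelligence Rising level corresponds to a stretch of the spectrum, not a point on it.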

The Hidden Elements: What's Not in the Tech Tree

One of Intelligence Rising's most insightful design choices is what it deliberately leaves out of the explicit tech tree. Many crucial factors are instead represented through player actions, special powers, or game events:

Scaling Dynamics

The framework doesn't explicitly model compute scaling, algorithmic improvements, or data collection, yet these factors drive much of real AI progress. Instead, players make strategic decisions about resource allocation that implicitly capture these dynamics.

Narrow AI Applications

Medical diagnosis, agricultural optimisation, and entertainment: these domain-specific applications aren't separate technologies in the tree but emerge from how players choose to deploy capabilities.

Infrastructure and Platforms

Model hosting services, API access, and development platforms aren't technologies you research but strategic choices about how to share (or hoard) capabilities.

Non-AI Innovation

Breakthroughs in quantum computing, biotechnology, or energy might dramatically affect AI development, but they enter the game through external events rather than player research.

This design choice reflects a crucial insight: not everything important needs to be explicitly modeled. By focusing the tech tree on core AI capabilities while allowing other factors to emerge through gameplay, Intelligence Rising maintains both clarity and richness.

Transformative AI and the Three Levels of Impact

Intelligence Rising's evolution has been deeply influenced by the concept of "transformative AI": AI that fundamentally reshapes society. Ross Gruetzemacher and Jess Whittlestone's 2022 framework provides crucial nuance here, distinguishing three levels of transformative impact.

This framework helps explain why Intelligence Rising focuses on "radically transformative" scenarios. The game isn't exploring whether AI will change specific industries—that's already happening. Instead, it examines scenarios where AI capabilities become so broad and powerful that they reshape the fundamental structure of human society.

Importantly, systems can be transformative without achieving human-level general intelligence. A language model that revolutionizes scientific research might transform civilization without necessarily qualifying as AGI. Intelligence Rising captures this through its various capability cards that can have game-changing effects even without reaching the AGI or CAIS endpoints.

Lessons from 43 Games: Patterns in Strategic Dynamics

Across 43 games with diverse participants, from government officials to tech executives to academic researchers, consistent patterns emerge:

Racing Dynamics Are Nearly Inevitable

Despite many games beginning with stated commitments to safety and cooperation, competitive races almost always emerge. The structure of the game doesn't force this; it emerges from human psychology and strategic logic. Even when players genuinely want to prioritize safety, the fear that others might not creates pressure to accelerate.

Crises Reshape Everything

Random events representing accidents, breakthroughs, or geopolitical shifts consistently prove pivotal. A single AI accident might trigger global cooperation or accelerate secret programs. These "wildcard" moments often matter more than careful strategy.

Cooperation Is Valuable but Fragile

International agreements and safety coalitions form naturally in many games, but they rarely survive the full session. A single defection, misunderstanding, or external shock can unravel carefully built trust. This fragility isn't a game artefact; it reflects real challenges in international cooperation.

Corporate-Government Tensions Persist

Even when playing on the same team, corporate and government actors often work at cross-purposes. Companies seek profit and market position; governments pursue security and control. These tensions create complex dynamics that pure corporate or pure government scenarios would miss.


Design Insights for Adaptive Forecasting Tools

Embrace Multiple Paradigms

Rather than betting on a single vision of how technology will develop, build frameworks that can explore multiple pathways. The AGI vs. CAIS distinction proved valuable not because one is "right" but because exploring both reveals different governance challenges.

Leave Room for Emergence

By not explicitly modeling everything, Intelligence Rising allows unexpected strategies and outcomes to emerge. This open-endedness makes the exercise more realistic and educational than rigid simulations.

Make Safety Integral, Not Optional

By requiring safety research alongside capability development, the framework captures a crucial reality: safety isn't something you add at the end but a parallel track that must advance throughout development.

Iterate Based on Reality

The framework's evolution from rough concept to structured game to online platform to policy integration shows the value of continuous refinement based on real-world developments and participant feedback.

Focus on Dynamics, Not Predictions

The game's value comes not from predicting specific technologies but from revealing strategic patterns. These dynamics (racing, cooperation, and crisis response) remain relevant regardless of which specific technologies emerge.

The Bridge Between Theory and Practice

Perhaps Intelligence Rising's greatest achievement is bridging the gap between abstract AI safety theory and practical governance challenges. Concepts like "instrumental convergence" or "mesa-optimization" become tangible when you're playing a tech CEO deciding whether to pause capability development for safety research while competitors race ahead.

The exercise transforms participants' mental models in ways that lectures or papers cannot. A government official who's experienced an in-game AI crisis thinks differently about regulation. A tech executive who's witnessed an AI arms race understands the prisoner's dilemma dynamics that make unilateral safety efforts so challenging.

This experiential learning proves particularly valuable for exploring scenarios that haven't yet occurred in reality. We can't wait for an actual AGI race to learn how to manage one. We need to build institutions and governance frameworks in advance. Intelligence Rising provides a safe space for this learning.

Looking Forward:

The Future of AI Scenario Planning

As AI capabilities continue advancing at unprecedented speed, tools like Intelligence Rising become increasingly vital. The next generation of scenario planning exercises will likely incorporate:

Dynamic Capability Models

Rather than fixed tech trees, future frameworks might use machine learning to continuously update capability projections based on real progress, creating truly adaptive scenarios.

Hybrid Human-AI Facilitation

AI assistants could help run more complex scenarios with more participants, enabling global-scale exercises that capture even richer dynamics.

Real-Time Policy Integration

Frameworks might directly connect to policy development processes, allowing proposed regulations to be tested in simulated environments before implementation.

Cross-Domain Integration

Future exercises might explore how AI development interacts with climate change, geopolitical shifts, and other global challenges, creating more holistic scenarios.

Building Mental Models for an Uncertain Future

The story of Intelligence Rising illustrates a profound truth about navigating technological transformation: the most valuable forecasting tools aren't necessarily those that predict specific outcomes, but those that help us think more clearly about complex, uncertain futures.

By transforming abstract debates about superintelligence and transformative AI into concrete strategic scenarios, Intelligence Rising serves as a bridge between academic theory and practical governance. It doesn't tell us exactly when AGI will arrive or what form it will take. Instead, it helps participants build robust mental models for navigating whatever future emerges.

The exercise's evolution from a troubling three-person roleplay to a sophisticated tool used by governments and tech giants demonstrates the power of iterative design informed by both theoretical insights and practical experience. Each version incorporated lessons from previous sessions, real-world AI developments, and evolving governance needs.

As we stand on the threshold of potentially transformative AI capabilities, the need for such tools only grows. We're not just trying to predict a predetermined future; we're actively shaping it through our choices about development, deployment, and governance. Intelligence Rising helps us make those choices more wisely by letting us experience their consequences in advance.

The ultimate lesson may be that in domains characterized by deep uncertainty and strategic complexity, experiencing possible futures beats predicting them. By helping participants viscerally understand the dynamics of AI development (the pressures, trade-offs, and unintended consequences), Intelligence Rising prepares them for decisions they'll face in reality.

The future of AI governance likely depends less on our ability to forecast specific capabilities and more on our capacity to build adaptive institutions and decision-making processes. As both AI technology and our understanding of its implications continue evolving, exercises like Intelligence Rising will prove essential for helping humanity navigate the narrow path to beneficial transformative AI.